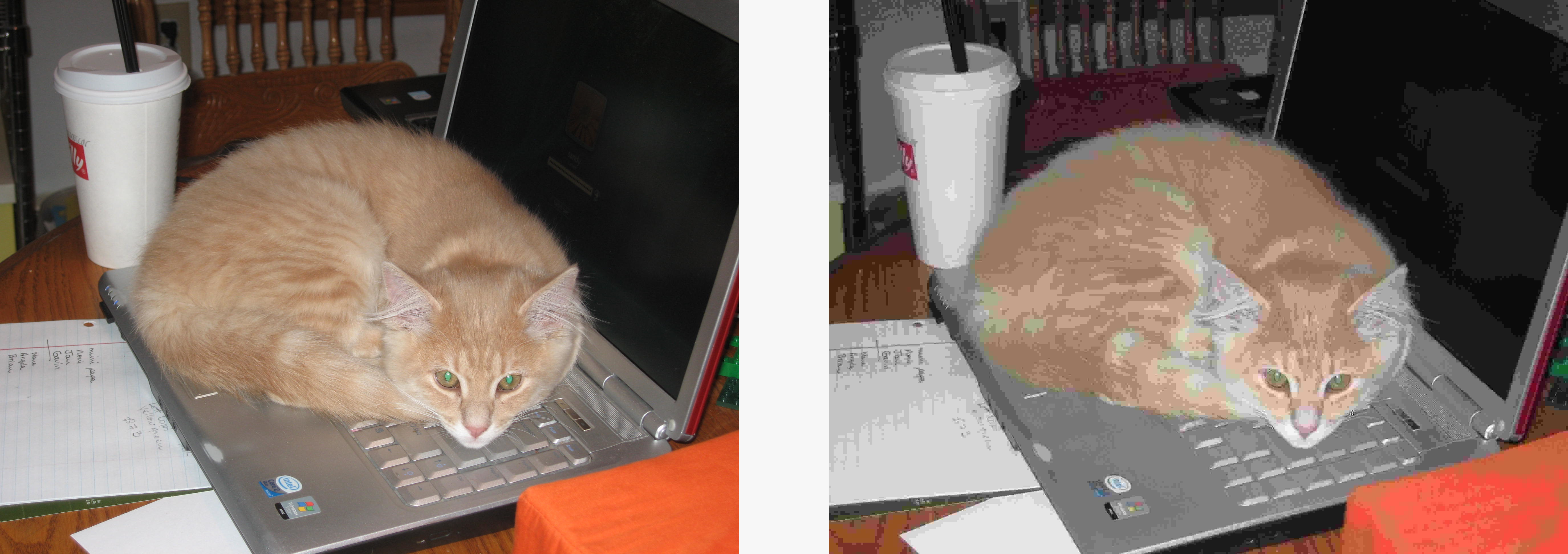

An idea that I had a few days ago on twitter:

image compression algorithm that resizes to the smallest size an AI can still classify the image correctly

So I made a quick demo in python.

Technical Stuff

I started by implementing a quick class that defines an 'evaluator', or

something that classifies an image. Essentially just a wrapper around a

pre-trained model from torchvision.

import torchvision.models as models

from torchvision import datasets, transforms as T

class Evaluator:

def __init__(self):

self.net = models.resnet152(pretrained=True)

# transforms from torchvision docs

self.transform = T.Compose(

[

T.Resize(256),

T.CenterCrop(224),

T.ToTensor(),

T.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]),

]

)

# set model to eval

self.net.eval()

def __call__(self, image):

"""

Returns predicted class for image

"""

transformed_image = self.transform(image).unsqueeze(0)

output = self.net(transformed_image)

_, predicted = output.max(1)

return predicted

This is fairly simple, load a pre-trained model (Resnet152, here for no particular reason.) the transforms from the documentation and set the mode to eval, since we aren't training. Then a quick method that applies those transforms and takes the predicted class as the max.

This maybe isn't the smartest way to approach the predicted class as we will see later, but this isn't a serious problem! It'll do for now.

The other component is the 'compressor'. Used here extremely loosely (is resizing 'compression', in some respects maybe), this implements an iterative method that simply runs an iterative 'compression' method and checks if the evaluator's prediction has changed.

from abc import ABC, abstractmethod

class Compressor(ABC):

def __init__(self, evaluator):

self.evaluator = evaluator

@abstractmethod

def iteration(self, image):

pass

def __call__(self, image):

initial_prediction = self.evaluator(image)

iteration = previous_iteration = image

iter_prediction = initial_prediction

while iter_prediction == initial_prediction:

previous_iteration = iteration

iteration = self.iteration(previous_iteration)

iter_prediction = self.evaluator(iteration)

return previous_iteration

Using compressor as an abstract class means it's easy to implement just the iteration.

from PIL import Image

from random import randint

from io import BytesIO

from compressor import Compressor

class ResizeCompressor(Compressor):

def iteration(self, image):

"""

Returns a resized image that is (at most)

10 pixels smaller in both directions

"""

current_size = image.size

new_size = (current_size[0] - 10, current_size[1] - 10)

# thumbnail preserves aspect ratio

image.thumbnail(new_size)

return image

class JPEGCompressor(Compressor):

def iteration(self, image):

buffer = BytesIO()

image.save(buffer, "JPEG", quality=randint(0, 100))

compressed = Image.open(buffer)

return compressed

In the first case, are we really 'compressing'? Well we are 'encoding information using fewer bits that the original', and it certainly is lossy.

I have also implemented a jpg compressor which maybe is more like what compression actually is. This saves the image with a random quality level, which whilst stochastic, does eventually accumulate the classic overly jpegged look. This means that the final image may look different but does produce cool effects.

Philosophical Questions

This opens a question around compression in general. Whilst formats like JPEG are designed around human perception. What would image compression look like if designed around other things perceptions?

In a world where data is processed by things that aren't human, why should we settle for human-friendly representations of these data? In the same way that JSON and XML might not be the greatest way to move data between services when there's no requirement for human intervention (why use text-based formats when a binary format might make more sense?). Why do we compress images for humans?

Surely there are other formats that similarly get the semantics of an image (or other piece of media as it may be) across without being necessarily human-friendly.

Conclusion

Whilst this scheme for 'compression' is obviously silly, and definitely more artistic than practical, we might consider it as maybe a decent tool for thinking about other ways that we might want to encode semantic information?

In the future, changes could be made to consider the hierarchy of the classes, or operate on thresholds of the changing outputs of the neural network. Currently it changes only when the max class changes which leads to termination where it changes label to something with a very similar semantic meaning (e.g. 'Tiger Cat' to 'Egyptian Cat').

Extra Bits (2022-04-11)

After this made the front page on Hacker News, someone provided me with a nice comment linking to a paper that explores similar ideas, here. It's worth a read!